Some business layer models are simple, too simple.

You’ve seen it a million times: code separation with a data access project doing the CRUD (create, read, update, delete) work, and a model layer that defines a bunch of properties with getters and setters and not much else. Then you plaster a user interface on top. For example, with ASP.NET MVC you find that the controllers do the donkey work of firing up the data access repository, calling the data access methods, populating the model and returning it to the view.

What’s wrong with this? Well, a couple of things.

One, you have a fair amount of code in your controllers, which means it lives in the UI project. We won’t get much reuse out of it if we wanted a different UI. We would need to move that code out into a class library project so that we at least have a chance of reusing it across different UI projects.

Two, what exactly is this model layer doing for us? Say we have a Customer class with the usual get and set properties. I bet you see this kind of model every day, and it’s just a plain old CLR object (POCO). In an MVC application, I bet the properties are also decorated with attributes for simple validation rules such as maximum string lengths, number ranges and required fields. This is useful because MVC can read those attributes and generate client-side JavaScript validation from them.
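The following is a minimal sketch of such a model. It assumes the standard `System.ComponentModel.DataAnnotations` attributes, and the `Customer` properties and the `CustomerValidation` helper are hypothetical names chosen for illustration. The attributes MVC reads for client-side validation can also be checked in plain .NET code with `Validator.TryValidateObject`:

```csharp
using System.Collections.Generic;
using System.ComponentModel.DataAnnotations;

// Hypothetical POCO for illustration: plain properties plus validation attributes.
public class Customer
{
    public int Id { get; set; }

    [Required]
    [StringLength(50)]
    public string Name { get; set; }

    [Range(0, 100)]
    public int DiscountPercent { get; set; }
}

public static class CustomerValidation
{
    // Checks the attribute-based rules outside MVC and returns the error messages.
    public static List<string> Validate(Customer customer)
    {
        var results = new List<ValidationResult>();

        // validateAllProperties: true is needed for [StringLength] and [Range];
        // with false, only [Required] is checked.
        Validator.TryValidateObject(customer, new ValidationContext(customer), results,
            validateAllProperties: true);

        var messages = new List<string>();
        foreach (var result in results)
        {
            messages.Add(result.ErrorMessage);
        }
        return messages;
    }
}
```

Each attribute looks at one property value in isolation. A rule that depends on other objects or on a database lookup has no natural place here.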

But that’s pretty much as advanced as it gets. OK, maybe you can do a little more validation using a third-party package like FoolProof, which lets you define dependencies between properties and do some other clever validations that MVC doesn’t do out of the box.

But most systems end up with quite complex business rules: rules that depend on the state of the object, on the state of other related objects and often on data tucked away in a data source (a database, a web service, a third-party service).

How do we express those in the model? We really want to, because if we can, we increase the reusability of our code. Not only that, but we can express ALL our business rules in one place.

“If you build it…”

You need something that provides a good business rules engine, something that helps track the state of your models, and something that works with all the various UI technologies in the Microsoft stack and on the web. That means supporting the different data binding techniques that WPF, WinForms, MVC, Windows Phone, etc. use today and will use in the future. If you want a reusable business model layer, that’s a lot of plumbing code to write and maintain. Oh, and maybe we need it to work with iOS and Android too.

“Stand on the shoulders of giants”.

Now, I’m all for writing framework code that makes my life easier. Developers tend to have a box of tricks and common code that travels with them from project to project and client to client. In my younger days, writing a framework was an enjoyable challenge, and the open source movement was not what it is today. Today, though, it’s all about being productive, and open source delivers a treasure chest of tools and support.

Say hello to my little friend.

Csla.Net is a framework that gives us an intelligent business model layer. It provides what we need to describe all our rules in the model, even the complex ones. So, what does a typical business model look like?

```csharp
using System;
using System.ComponentModel;
using Csla;

[Serializable]
public class VehicleEdit : BusinessBase<VehicleEdit>
{
    public VehicleEdit()
    {
        /* Normally this constructor would be private, forcing callers to use
           the factory methods to create an instance, but MVC model binding
           needs a public parameterless constructor. */
    }

    public static readonly PropertyInfo<byte[]> TimeStampProperty =
        RegisterProperty<byte[]>(c => c.TimeStamp);

    [Browsable(false)]
    [EditorBrowsable(EditorBrowsableState.Never)]
    public byte[] TimeStamp
    {
        get { return GetProperty(TimeStampProperty); }
        set { SetProperty(TimeStampProperty, value); }
    }

    public static readonly PropertyInfo<int> IdProperty =
        RegisterProperty<int>(c => c.Id);

    public int Id
    {
        get { return GetProperty(IdProperty); }
        set { SetProperty(IdProperty, value); }
    }

    public static readonly PropertyInfo<string> NameProperty =
        RegisterProperty<string>(c => c.Name);

    public string Name
    {
        get { return GetProperty(NameProperty); }
        set { SetProperty(NameProperty, value); }
    }

    // ...continued
}
```

Here we have a VehicleEdit class. I have called it VehicleEdit rather than Vehicle because it represents a vehicle model that is editable. It inherits from the Csla class BusinessBase<T>, which gives it properties out of the box such as IsNew, IsDirty, IsValid and IsSavable, methods such as BeginEdit, CancelEdit, ApplyEdit and Save, and events such as PropertyChanged.

If I wanted a read-only vehicle model, I would inherit from the ReadOnlyBase<T> class instead. Csla.Net encourages you to write models that are specific to each use case. So while you could use a VehicleEdit model on a read-only UI page, it’s better, and more efficient, to use a ReadOnlyBase-derived model. In the long term, this simplifies the UI code.

The properties look a little different, but what they do is register managed backing fields that the framework then tracks. The GetProperty and SetProperty methods hook into the Csla framework and do a number of things. They flag the model as dirty when a property value changes, raise data binding events for your UI, run business rule validation, check authorization rules (is the current user allowed to read or set this property, or this model?), and update the IsValid and IsSavable properties. It all sounds very useful.
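To make that list concrete, here is a deliberately simplified sketch in plain C# of what a managed property setter does, with no Csla.Net dependency. It is not Csla.Net's implementation or API. `TrackedModelBase`, `Validate`, `CanWriteProperty` and the `Vehicle` class are hypothetical names chosen for illustration, and properties are keyed by name strings instead of registered `PropertyInfo<T>` objects:

```csharp
using System;
using System.Collections.Generic;
using System.ComponentModel;

// Hypothetical, simplified stand-in for a framework base class.
// This is NOT how Csla.Net is implemented; it only illustrates the ideas.
public abstract class TrackedModelBase : INotifyPropertyChanged
{
    private readonly Dictionary<string, object> _values = new Dictionary<string, object>();
    private readonly Dictionary<string, string> _brokenRules = new Dictionary<string, string>();

    public event PropertyChangedEventHandler PropertyChanged;

    public bool IsDirty { get; private set; }
    public bool IsValid => _brokenRules.Count == 0;
    public bool IsSavable => IsDirty && IsValid;

    protected T GetProperty<T>(string name) =>
        _values.TryGetValue(name, out var value) ? (T)value : default(T);

    protected void SetProperty<T>(string name, T value)
    {
        // Authorization check before anything changes.
        if (!CanWriteProperty(name))
            throw new InvalidOperationException($"Not allowed to set {name}.");

        // An unchanged value does nothing: no dirty flag, no event.
        if (_values.TryGetValue(name, out var current) && Equals(current, value))
            return;

        _values[name] = value;
        IsDirty = true;           // 1. mark the object dirty
        CheckRules(name);         // 2. re-run the rules for this property
        OnPropertyChanged(name);  // 3. notify data-bound UIs
    }

    // Override to add authorization; the default allows every write.
    protected virtual bool CanWriteProperty(string name) => true;

    // Override to return an error message for a broken rule, or null if valid.
    protected virtual string Validate(string name) => null;

    private void CheckRules(string name)
    {
        var error = Validate(name);
        if (error == null) _brokenRules.Remove(name);
        else _brokenRules[name] = error;
    }

    protected void OnPropertyChanged(string name) =>
        PropertyChanged?.Invoke(this, new PropertyChangedEventArgs(name));
}

// Hypothetical model built on the sketch above.
public class Vehicle : TrackedModelBase
{
    public string Name
    {
        get => GetProperty<string>(nameof(Name));
        set => SetProperty(nameof(Name), value);
    }

    protected override string Validate(string name) =>
        name == nameof(Name) && string.IsNullOrWhiteSpace(Name) ? "Name is required." : null;
}
```

Setting `Name` to an empty string marks the object dirty and invalid and raises `PropertyChanged`. Setting it to a real name makes `IsSavable` true. Csla.Net also handles typed property registration, per-property authorization rules and n-level undo (BeginEdit/CancelEdit/ApplyEdit). That is a strong argument for not hand-writing this plumbing.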

This is only the start, just the starter of a seven-course dinner!

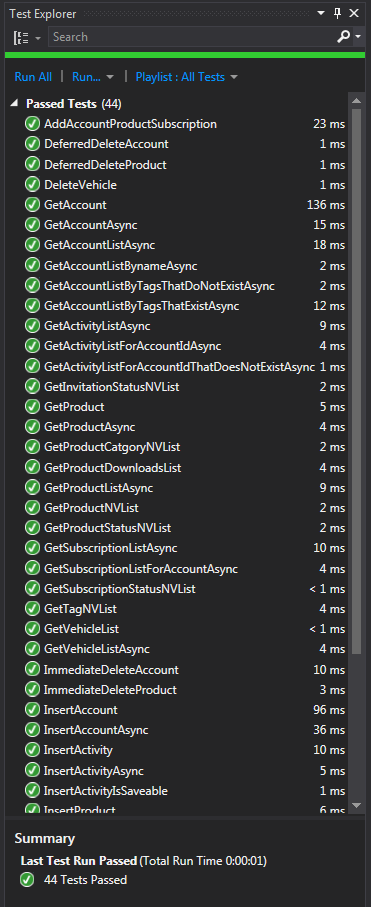

How do we write validation rules? How do we write authorization rules and enforce them? Can we control access down to the property level? How do we call the data access code? How do we work with these Csla-based business objects in the UI code? How do we deploy physically as 1-tier, 2-tier or 3-tier without any code changes? How do we unit test and mock data?

For now, just know that we can elegantly do all of this when using Csla.Net.

I’ll blog more about this soon.

Richard.